Notes - Pages 13-25

Statement mutual independence

Every variable a statement S reads belongs to its input set I(S), and every variable it writes belongs to its output set O(S). Two statements S_i and S_j (S_i before S_j in program order) are independent, and can run in parallel, if all three of the following hold:

- O(S_i) ∩ O(S_j) = ∅ (output independence)
- I(S_j) ∩ O(S_i) = ∅ (flow independence)
- I(S_i) ∩ O(S_j) = ∅ (anti-independence)

Together these are known as Bernstein's conditions.
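The three conditions reduce to set intersections, so a minimal Python sketch can check them directly. It assumes each statement is already summarized by Python sets of variable names for I(S) and O(S); the helper names `bernstein_conditions` and `independent`, and the example statements, are hypothetical illustrations, not from the text.

```python
from typing import Dict, Set

def bernstein_conditions(
    in_i: Set[str], out_i: Set[str], in_j: Set[str], out_j: Set[str]
) -> Dict[str, bool]:
    """Evaluate Bernstein's conditions for S_i followed by S_j in program order."""
    return {
        # O(S_i) ∩ O(S_j) = ∅: the statements never write the same variable
        "output": not (out_i & out_j),
        # I(S_j) ∩ O(S_i) = ∅: S_j reads nothing that S_i writes
        "flow": not (in_j & out_i),
        # I(S_i) ∩ O(S_j) = ∅: S_j writes nothing that S_i reads
        "anti": not (in_i & out_j),
    }

def independent(
    in_i: Set[str], out_i: Set[str], in_j: Set[str], out_j: Set[str]
) -> bool:
    """S_i and S_j may run in parallel only if all three conditions hold."""
    return all(bernstein_conditions(in_i, out_i, in_j, out_j).values())

# Hypothetical statements:
#   S1: C = D * E   ->  I = {D, E}, O = {C}
#   S2: M = G + C   ->  I = {G, C}, O = {M}
#   S3: A = B + E   ->  I = {B, E}, O = {A}
print(bernstein_conditions({"D", "E"}, {"C"}, {"G", "C"}, {"M"}))
# {'output': True, 'flow': False, 'anti': True}  (S2 reads C, which S1 writes)
print(independent({"D", "E"}, {"C"}, {"B", "E"}, {"A"}))
# True  (S1 and S3 both read E, but shared reads are allowed)
```

Only writes can break independence: two statements reading the same variable violate none of the three conditions.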

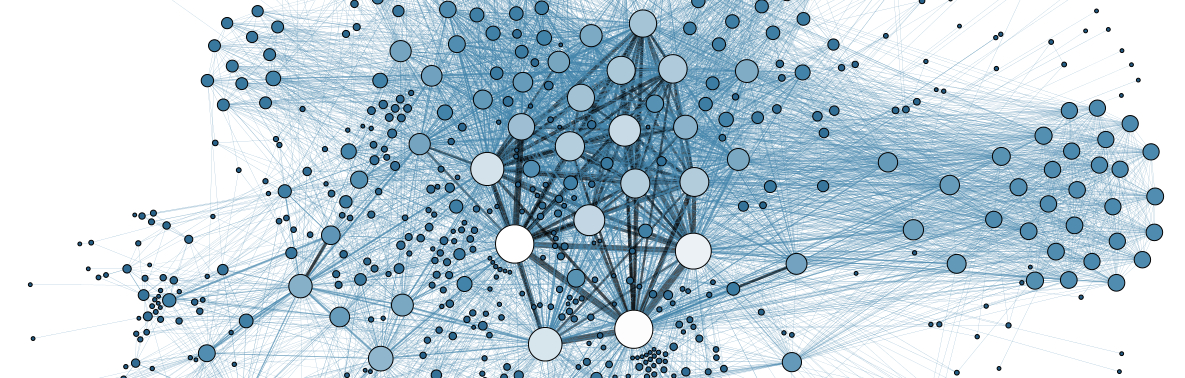

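Parallelism is commutative but not transitive (18): if S_1 and S_2 can run in parallel, so can S_2 and S_1, but S_1 being parallel with S_2 and S_2 being parallel with S_3 does not imply S_1 is parallel with S_3 (for example, S_1 and S_3 might both write the same variable). Statement independence therefore has to be checked for every pair, an O(N^2) check.

The section covered architecture graphs, pseudocode definitions, primitives, and the conditions that make parallelizing statements possible, where a statement can be any unit of computation.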
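Because independence is not transitive, no shortcut avoids testing every pair. A minimal Python sketch of that all-pairs check, assuming each statement is given as a hypothetical `(name, input set, output set)` tuple in program order; the helpers `parallel` and `parallel_pairs` and the three statements are made up for illustration.

```python
from itertools import combinations
from typing import List, Set, Tuple

# (name, input set I(S), output set O(S)); statements listed in program order
Statement = Tuple[str, Set[str], Set[str]]

def parallel(a: Statement, b: Statement) -> bool:
    """Bernstein's conditions: no output, flow, or anti dependence between a and b."""
    _, in_a, out_a = a
    _, in_b, out_b = b
    return not (out_a & out_b) and not (in_b & out_a) and not (in_a & out_b)

def parallel_pairs(stmts: List[Statement]) -> List[Tuple[str, str]]:
    """Test every pair: n*(n-1)/2 checks, i.e. O(n^2), because independence of
    (S1, S2) and (S2, S3) says nothing about (S1, S3)."""
    return [
        (a[0], b[0])
        for a, b in combinations(stmts, 2)  # yields pairs in program order
        if parallel(a, b)
    ]

# Hypothetical statements:
#   S1: X = A + 1    S2: Y = B * 2    S3: X = C - 3
stmts = [
    ("S1", {"A"}, {"X"}),
    ("S2", {"B"}, {"Y"}),
    ("S3", {"C"}, {"X"}),
]
print(parallel_pairs(stmts))  # [('S1', 'S2'), ('S2', 'S3')]
```

S1 and S3 are missing from the result because both write X, even though each is independent of S2. Swapping the arguments to `parallel` only exchanges the flow and anti tests, so the result is the same either way, which is the commutativity noted above.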